PS Vita Homebrew (post mortem)

Jun 07, 2021

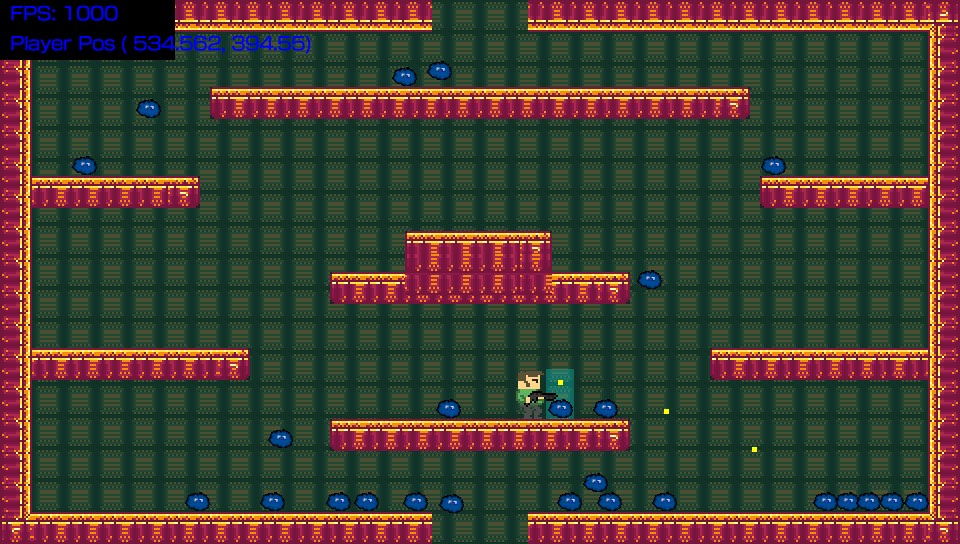

screenshot of PC version with working UI

Between late April and early June 2021, I worked on a homebrew game for the Playstation Vita. Scroll to the bottom of the page if you just want to see the game and don't care about the boring technical parts!

A group of Vita homebrew enthusiasts organized a two-month-long game development competition for the Playstation Vita shortly after Sony announced (and later backpedaled on) its plans to shut down the Playstation Store on the Vita. The community crowdfunded a nice little cash prize of just under $2000 to be distributed to the winners.

I'll admit that I'm not super knowledgeable about or experienced with reverse engineering, but luckily it turns out that developing homebrew for the Vita isn't all that difficult. This is thanks to a surprisingly high quality and well-maintained SDK/toolchain that some really smart people managed to piece together through their reverse engineering efforts.

My background with Vita homebrew

My involvement with the Vita homebrew world started around 2016 (or whenever HENkaku was first released). I happened to have a Vita at the time which I wasn't using much, so seeing that I could actually start developing games on it got me super excited, and I dedicated a few months to playing around with it. I put together a tiny little "engine" held together with Makefiles and duct-tape, and built a basic 2D platformer/shooter vaguely reminiscent of Super Crate Box. I never finished or released that because, well, it didn't seem like it was worth the effort to finish a game that only a small subset of people who owned one of the worst-selling Playstation consoles of all time might be able to play.

But that codebase was there, and it worked reasonably well. Over the years, I'd go back to it and play around a bit, sometimes out of boredom, sometimes to practice/learn new things. For example, one of the first improvements I made was porting the game to PC using SDL2. That way, I could build games with it that run on both PC and Vita using the same codebase. This was accomplished with more Makefiles and duct tape.

Later on, I got very interested in build systems, and how much of an impact they have on productivity, software architecture, and pretty much everything. So I decided to study them, tried out a few, and eventually tried building my own. In order to make a build system, I needed something to build! So naturally I decided to use that Vita/PC game engine I had, because it presented some non-trivial build challenges.

My custom build system worked, and I had the game successfully building for both platforms using it. It even supported distributed builds across multiple computers! But, like everyone who has ever tried to make their own build system eventually learns, it's a much much much harder project than it seems at first, second, third, and even the 100th glance (well after you've built your own). There are so many edge cases, so many potential ways for it to fail, so many real-world situations that you can't possibly anticipate that it makes designing a good build system extremely difficult. And to make things worse, a broken build system is a show-stopper for any project, so any fixes to it usually come in the form of unsustainable short-term hacks.

Long story short, I scrapped my custom build system and decided to find a new one. I already had experience with a couple of different ones, and having built my own gave me a useful perspective when evaluating others. I guess you could say that I'm a build system snob.

Eventually, I settled on a build system called Waf. I won't get into the details here, but I'll just say that Waf is one of the best-designed pieces of software I've ever used. It has an elegant answer to seemingly every single possible edge case a build system can run into, and it's very easy to work with (once you get past the brutal learning curve).

Anyways, throughout all of this build system experimentation, my crappy PS Vita game engine was a guinea pig. It turns out that investing in a solid build system has a huge impact on the long-term quality, maintainability, and productivity of a codebase, even after tons of refactoring. Once I had settled on Waf, I was able to play around with different ways of organizing the project, including support for multiple backends. Today, the engine supports Vita, Windows, Mac, Linux, and Android, with partial support for iOS (it builds, but there's no renderer yet). It also supports various graphics APIs, including a simple 2D one based on SDL2, and a more advanced one powered by OpenGL ES.

In fact, I'm using that engine today for a mobile puzzle game I'm building. It's a real project built on a codebase that started as a crappy PS Vita homebrew experiment.

Entering the competition

The prize pool of this competition is pretty big by game dev competition standards (because it's greater than $0), but in truth that's not what motivated me to enter. The fact that I had this game engine which happened to support the Vita made it hard to resist, and since it ran for 2 months I could casually work on it with little stress.

But really the main motivation for me entering was that it gave me an excuse to add features to my engine. The main thing I wanted to do was add 3D rendering, because it originally started as a 2D-only engine using a library called libvita2d. I've been wanting to add 3D support to my GPU/hardware abstraction layer ever since I created the OpenGL ES renderer, but doing so would've meant abandoning Vita support, since there is no "libvita3d", and I don't even have a way to compile shaders!

Sure, the Vita isn't exactly the most important hardware to target in 2021, but considering how long it's been a part of this engine of mine, I didn't have the heart to get rid of it. Plus, the mobile game I'm building doesn't need 3D graphics. Luckily, this competition gave me the kick in the butt I needed to finally do it, and an excuse to take a short break from my mobile game. So even if I don't win the competition, I still end up with a net positive thanks to the improvements to the engine!

Adding 3D graphics support

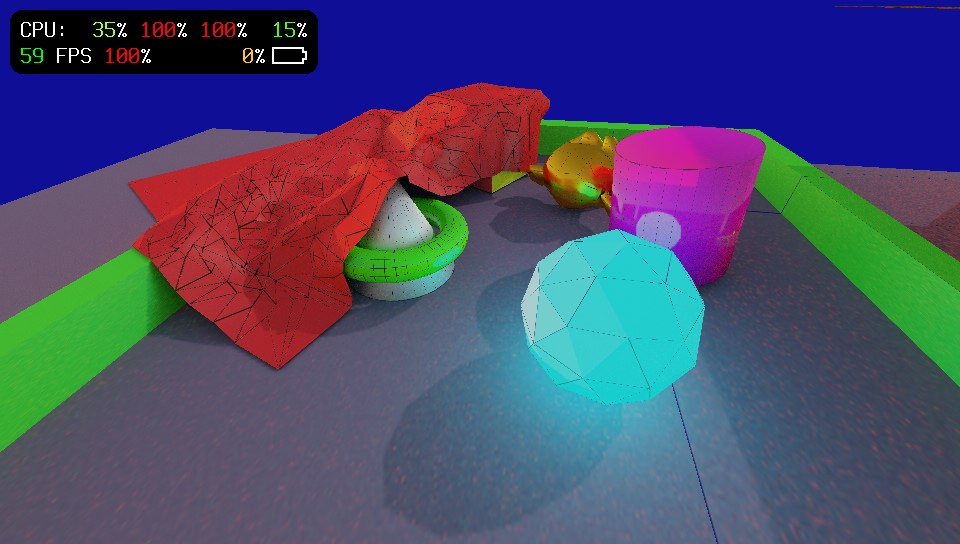

The black lines are due to poor texture mapping from my scene exporter, as it's just blindly using the Smart UV Project operator in Blender

Part of the challenge for me was that my graphics API was designed to model the libvita2d and SDL2 drawing APIs, which were the only things it supported back when I wrote it. When I added an OpenGL-based renderer, I created another API which was still mostly for 2D, but also supported arbitrary mesh rendering. With this, I implemented the old API on top of the new one and got rid of SDL2.
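Implementing an old 2D-style API on top of a mesh API can be pictured roughly like this. This is a hypothetical C sketch, not the engine's code: the `Vertex`, `MeshBatch`, `batch_push_quad`, and `draw_fill_rect` names are made up. A legacy "fill rectangle" call simply becomes two triangles appended to a vertex batch that the mesh renderer would later submit in one draw.

```c
#include <stddef.h>

/* Hypothetical vertex layout for the newer, mesh-based API. */
typedef struct {
    float x, y, z;      /* position */
    float u, v;         /* texture coordinates */
    unsigned int rgba;  /* packed vertex color */
} Vertex;

/* Hypothetical CPU-side batch that collects triangles before a draw call. */
#define MAX_VERTS 6144
typedef struct {
    Vertex verts[MAX_VERTS];
    size_t count;
} MeshBatch;

/* Appends a quad as a triangle list (two triangles, six vertices).
   Returns 0 on success, -1 if the batch is full. */
static int batch_push_quad(MeshBatch *b, Vertex tl, Vertex tr, Vertex bl, Vertex br) {
    if (b->count + 6 > MAX_VERTS)
        return -1;
    Vertex *v = &b->verts[b->count];
    v[0] = tl; v[1] = bl; v[2] = tr;  /* first triangle */
    v[3] = tr; v[4] = bl; v[5] = br;  /* second triangle */
    b->count += 6;
    return 0;
}

/* Legacy-style 2D call expressed through the mesh API:
   a solid rectangle is just a flat quad at z = 0. */
static int draw_fill_rect(MeshBatch *b, float x, float y, float w, float h, unsigned int rgba) {
    Vertex tl = { x,     y,     0.0f, 0.0f, 0.0f, rgba };
    Vertex tr = { x + w, y,     0.0f, 1.0f, 0.0f, rgba };
    Vertex bl = { x,     y + h, 0.0f, 0.0f, 1.0f, rgba };
    Vertex br = { x + w, y + h, 0.0f, 1.0f, 1.0f, rgba };
    return batch_push_quad(b, tl, tr, bl, br);
}
```

The appeal of this layering is that only the mesh path has to be implemented per backend; every old 2D call rides on it for free.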

Adding 3D support to the OpenGL-based API is straightforward, but doing it on the Vita is less so. Without a shader compiler, I can't compile shaders! Luckily, libvita2d ships with its own pre-compiled shaders. They're extremely basic, but they're generic enough for me to hijack them for my own purposes. All I had to do was replace the world-view-projection matrix with my own and boom: instant 3D graphics. But because the libvita2d shaders are extremely basic, there's no way for me to add dynamic lighting to my game. So instead, I decided to go with 100% baked lighting.

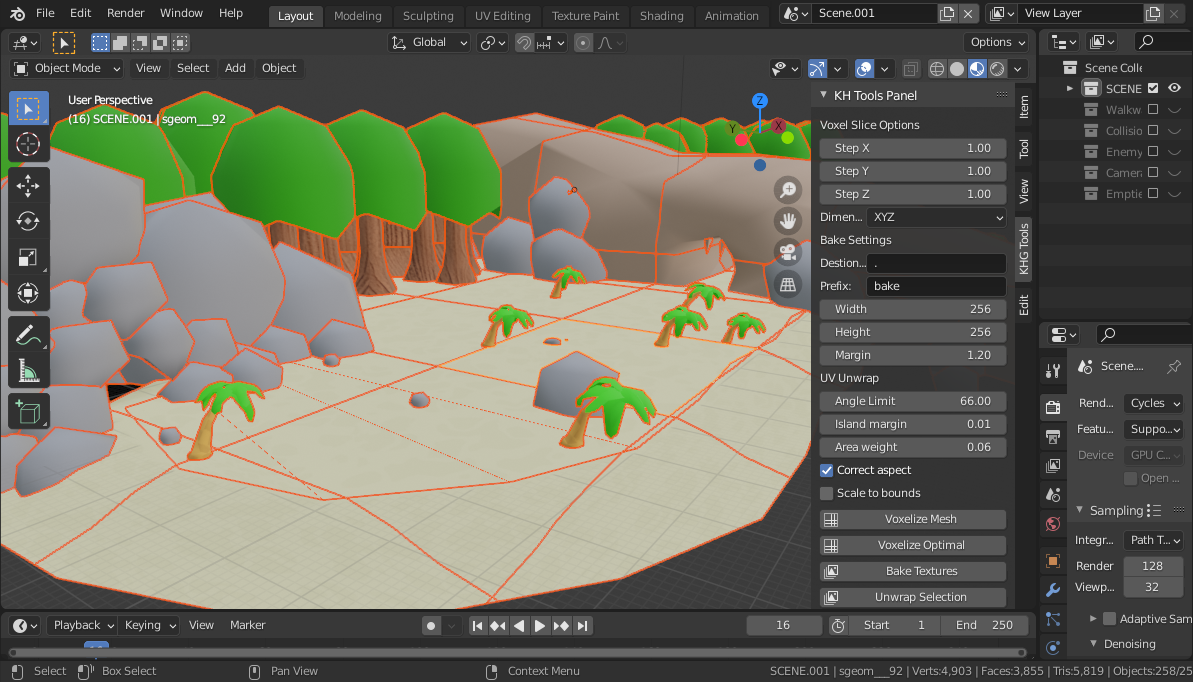

To accomplish this, I wrote a Blender plugin that slices and merges the world geometry into chunks as part of the export process. That way, I can bake the lighting of each chunk into a single texture. This is important because the libvita2d shaders only allow me to render unlit textured meshes, so baking the lighting into textures is the only option.

As soon as I did this, I very quickly ran into the limits of the Vita's hardware. Making the chunks of the world small helps improve quality, but requires more vertices. Making the chunks too big causes the textures to be blurry unless I increase the resolution. Increasing the resolution is difficult because the Vita only has 128 MB of VRAM. It's all a careful balancing act!
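The balancing act can be made a bit more concrete with a rough back-of-the-envelope model. This is my own simplification, not something measured in the game: assume the lit world surface has total area $A$, each chunk covers roughly an $s \times s$ patch of it, each chunk gets an $R \times R$ lightmap, and each texel costs $b$ bytes of VRAM. Then texel density $d$, chunk count $N$, and lightmap memory $M$ are approximately:

$$
d = \frac{R}{s}, \qquad N \approx \frac{A}{s^2}, \qquad M \approx N R^2 b = A \left(\frac{R}{s}\right)^2 b = A\, d^2\, b
$$

Under this model, memory is governed by texel density rather than by chunk size alone: shrinking chunks at a fixed $R$ sharpens the lighting but multiplies the number of textures, and doubling $d$ quadruples $M$. Chunk size then mostly decides how that cost is split between more geometry (small chunks) and larger individual textures (big chunks).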

My solution to alleviate this problem was to turn to compressed textures. Since GPUs can't read PNGs, you need to decompress them first, and the raw uncompressed pixel data for an image is HUGE. An uncompressed 512x512 RGBA texture at 4 bytes per pixel is 1 MB of data! The way to reduce this is to send the pixels in a compressed format that the GPU can decompress as part of its pipeline. This is what compressed textures are for, but unfortunately not all hardware supports all formats. The Vita, for example, has a PowerVR GPU, so it supports PowerVR texture compression (PVRTC) and some other formats, but since my engine is portable, I don't want to have to worry about texture formats when adding assets to a project.
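To put numbers on this, here is a small C helper for texture sizes (a single mip level, no mipmaps). The PVRTC1 formula follows the format's layout of 64-bit blocks covering 4x4 pixels at 4bpp or 8x4 pixels at 2bpp, with a minimum of 2x2 blocks per texture; the function names are my own.

```c
#include <stddef.h>

/* Bytes for an uncompressed RGBA8 texture: 4 bytes per pixel, one mip level. */
static size_t rgba8_size(size_t w, size_t h) {
    return w * h * 4;
}

/* Bytes for one PVRTC1 mip level; bpp must be 2 or 4.
   Blocks are 64 bits covering 4x4 (4bpp) or 8x4 (2bpp) pixels, and a texture
   needs at least 2x2 blocks, which gives the minimum dimensions below. */
static size_t pvrtc1_size(size_t w, size_t h, int bpp) {
    size_t min_w = (bpp == 2) ? 16 : 8;
    size_t min_h = 8;
    if (w < min_w) w = min_w;
    if (h < min_h) h = min_h;
    return w * h * (size_t)bpp / 8;
}
```

For the game's 248 world textures at 256x256, that works out to 62 MiB as uncompressed RGBA8 versus 7.75 MiB as 4bpp PVRTC, assuming the 4bpp variant (the post doesn't say which one was used) and ignoring mipmaps. That difference is a large slice of a 128 MB VRAM budget.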

Using compressed textures

Scene used to test textures, geometry loading, and octree implementation

Luckily, there is a solution to this problem from a company called Binomial. They released an open source format called "Basis Universal". The key feature is that it can be very quickly transcoded (converted) into many other compressed texture formats. You could have the transcoder run as part of your build process when you're building for a single hardware target with known texture support (like Vita!), or you could ship the basis textures and include the transcoder in your engine, so that they're transcoded at installation time, or even at runtime!

I was curious to see the performance of the transcoder on Vita, so I went the runtime transcoding route. Also, I didn't use .basis textures directly; instead, I used a project from Khronos called libKtx. It's basically a library for the KTX container format, which can hold Basis (or other) texture data, and it comes with a C API along with tools for creating .ktx files.

Anyways, after a few weeks of pain (try debugging a GPU crash on a system like the Vita without any debugging tools), I got it to work! I didn't spend the time to do any formal benchmarks, but the performance of Basis runtime transcoding seems to be pretty damn good even on a Vita. For reference, the game I shipped loads roughly 250 to 280 textures (248 of those are 256x256 RGB textures for the world geometry), all of which are transcoded into one of two PVRTC compressed texture formats (one for RGB, another for RGBA).

The load-decompress-transcode process happens asynchronously across two threads, with a gray placeholder used while a texture is still loading. This means that you can see how long it takes to load everything. It isn't instant by any means. The game shows a 5 second splash screen with the competition logo, and even after those 5 seconds you can still see some unloaded textures. But still, I think the performance is impressive all things considered, and if I were to add a proper loading screen I could reduce the time to load everything slightly by using 3 threads instead of 2.
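A two-thread loader with a placeholder fallback might look something like the following portable POSIX-threads sketch. It is hypothetical, not the engine's code: `load_and_transcode()` stands in for reading the file, decompressing, and transcoding, and a real engine would likely still hand the results to the render thread for the GPU upload. Workers pull the next job from a shared counter, and the renderer keeps drawing the placeholder until a slot is marked ready.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stdlib.h>

#define NUM_WORKERS 2

typedef struct {
    int id;                 /* which asset to load */
    atomic_int ready;       /* 0 = draw the placeholder, 1 = pixels are published */
    unsigned char *pixels;  /* transcoded data, written once by a worker */
} TextureSlot;

typedef struct {
    TextureSlot *slots;
    int count;
    int next;               /* next slot index to hand out; guarded by lock */
    pthread_mutex_t lock;
    pthread_t threads[NUM_WORKERS];
    int started;            /* number of worker threads actually created */
} Loader;

/* Hypothetical stand-in for read file -> zstd decompress -> Basis transcode. */
static unsigned char *load_and_transcode(int id) {
    unsigned char *p = malloc(16);
    if (p)
        for (int i = 0; i < 16; i++) p[i] = (unsigned char)id;
    return p;
}

static void *worker_main(void *arg) {
    Loader *l = arg;
    for (;;) {
        pthread_mutex_lock(&l->lock);
        int job = (l->next < l->count) ? l->next++ : -1;
        pthread_mutex_unlock(&l->lock);
        if (job < 0)
            return NULL;  /* queue drained */
        TextureSlot *s = &l->slots[job];
        s->pixels = load_and_transcode(s->id);
        /* Release ordering: the pixel writes are visible before ready reads as 1. */
        atomic_store_explicit(&s->ready, 1, memory_order_release);
    }
}

static int loader_start(Loader *l, TextureSlot *slots, int count) {
    l->slots = slots;
    l->count = count;
    l->next = 0;
    l->started = 0;
    if (pthread_mutex_init(&l->lock, NULL) != 0)
        return -1;
    for (int i = 0; i < NUM_WORKERS; i++) {
        if (pthread_create(&l->threads[i], NULL, worker_main, l) != 0)
            break;
        l->started++;
    }
    if (l->started == 0) {
        pthread_mutex_destroy(&l->lock);
        return -1;
    }
    return 0;
}

/* Blocks until every queued texture has been processed. */
static void loader_wait(Loader *l) {
    for (int i = 0; i < l->started; i++)
        pthread_join(l->threads[i], NULL);
    pthread_mutex_destroy(&l->lock);
}

/* Called by the renderer each frame: the real texture if published, else the placeholder. */
static const unsigned char *texture_for_draw(TextureSlot *s, const unsigned char *placeholder) {
    if (atomic_load_explicit(&s->ready, memory_order_acquire) && s->pixels != NULL)
        return s->pixels;
    return placeholder;
}
```

The atomic `ready` flag with release/acquire ordering is what lets the renderer check every slot each frame without taking the queue lock. Bumping `NUM_WORKERS` to 3 is the kind of change the post mentions for a dedicated loading screen.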

I did add a priority system so that textures can be assigned a priority when they're added to the load queue. That way, on-screen textures can be loaded first so that it at least seems like everything loaded. However, even though the system is in place, I didn't actually have time to test it and use it in the final game!
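The post doesn't describe how the priority system is implemented, so here is one common way to do it, sketched in C as an assumption: a binary max-heap of load jobs where a higher priority (for example, an on-screen texture) pops first, and jobs with equal priority keep their insertion order. All names are hypothetical.

```c
#include <stddef.h>

#define MAX_JOBS 512

typedef struct {
    int texture_id;
    int priority;   /* higher = load sooner */
    unsigned seq;   /* insertion order; breaks ties first-in, first-out */
} LoadJob;

typedef struct {
    LoadJob items[MAX_JOBS];
    size_t size;
    unsigned next_seq;
} JobHeap;

/* Nonzero if job a should be loaded before job b. */
static int job_before(const LoadJob *a, const LoadJob *b) {
    if (a->priority != b->priority)
        return a->priority > b->priority;
    return a->seq < b->seq;
}

static void swap_jobs(LoadJob *a, LoadJob *b) { LoadJob t = *a; *a = *b; *b = t; }

/* Adds a job and sifts it up. Returns 0 on success, -1 if the heap is full. */
static int heap_push(JobHeap *h, int texture_id, int priority) {
    if (h->size == MAX_JOBS)
        return -1;
    size_t i = h->size++;
    h->items[i] = (LoadJob){ texture_id, priority, h->next_seq++ };
    while (i > 0) {
        size_t parent = (i - 1) / 2;
        if (!job_before(&h->items[i], &h->items[parent]))
            break;
        swap_jobs(&h->items[i], &h->items[parent]);
        i = parent;
    }
    return 0;
}

/* Removes the most urgent job into *out and sifts down. Returns 0, or -1 if empty. */
static int heap_pop(JobHeap *h, LoadJob *out) {
    if (h->size == 0)
        return -1;
    *out = h->items[0];
    h->items[0] = h->items[--h->size];
    size_t i = 0;
    for (;;) {
        size_t l = 2 * i + 1, r = l + 1, best = i;
        if (l < h->size && job_before(&h->items[l], &h->items[best])) best = l;
        if (r < h->size && job_before(&h->items[r], &h->items[best])) best = r;
        if (best == i)
            break;
        swap_jobs(&h->items[i], &h->items[best]);
        i = best;
    }
    return 0;
}
```

In a threaded loader, pushes and pops would sit behind the same mutex that guards the queue, and the workers would pop from the heap instead of walking the list in order.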

Of course, the proper thing would be to just do the transcoding offline and ship PVRTC textures that are ready to load. I did not have time to do this. However, it should be noted that shipping Basis textures directly has an added benefit: the textures are FREAKING SMALL. libKtx's converter tool uses Facebook's Zstandard (zstd) library to supercompress the Basis textures. Doing this reduced the size of my scene's textures from 19.7 MB down to 2.3 MB on disk!!! That's an impressive saving, especially when you consider that those 19.7 MB are PNG files that are already compressed, and GPU compressed texture formats generally do not compress as well on disk. I think this is due to the Basis Universal format itself compressing really well.

So thanks to this crazy compression, the game's final VPK is only 5.7 MB! And even that is not fully optimized, since there are some extra assets in there that aren't being used.

Compiling shaders

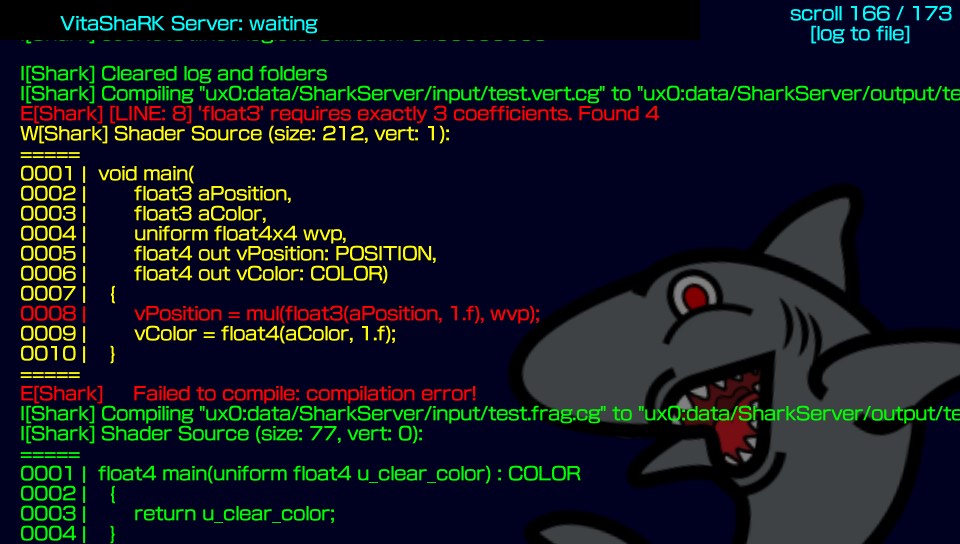

My unused, but fully functional Vita shader build server. The shark graphic is from the VitaShark project

I said earlier that I didn't have a shader compiler, but that's not entirely true. I don't know the full history of this, but at some point Sony had a program called Playstation Mobile or "PSM", which I think was an indie publishing thing, kind of like what XNA was. Regardless, it required a special runtime to be installed on the console to run PSM games, and that runtime happens to include a shader compiler! (I guess Sony didn't want to give development tools to PSM developers?)

Anyways, someone created a library that lets you use that shader compiler. Unfortunately, since it's in the PSM runtime, it means you need to compile your shaders on an actual Vita. Compiling shaders at program start is nothing new, but I wanted to ship precompiled shaders.

So at some point during the development of this game, I decided to add a Vita shader compiler to my toolbelt. And I succeeded! Unfortunately, even though I did build it, I didn't get around to using it. My shipped game uses the same shaders from libvita2d.

But it's kind of cool how it works. I created a server application that you run on the Vita. When you want to compile a shader, you upload the shader sources into a folder on the Vita using FTP, then you issue a command to the server application to start the build. It will then output the results into another folder on the Vita, and send a response to the client indicating success/failure state. Once you get a "success" response, you can then download the compiled shaders using FTP.
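The post doesn't specify the wire format between the build system and the server, so the following C sketch invents a minimal line-based one purely for illustration: after uploading the sources over FTP, the client sends `BUILD <job-folder>`, and the server answers `OK` or `FAIL <log>`. The command words, status enum, and folder path in the usage are all hypothetical.

```c
#include <stdio.h>
#include <string.h>

typedef enum { BUILD_OK, BUILD_FAILED, BUILD_BAD_RESPONSE } BuildStatus;

/* Writes "BUILD <job_folder>\n" into buf. Returns its length, or -1 if it doesn't fit. */
static int format_build_command(char *buf, size_t cap, const char *job_folder) {
    int n = snprintf(buf, cap, "BUILD %s\n", job_folder);
    return (n < 0 || (size_t)n >= cap) ? -1 : n;
}

/* Parses one response line from the server. On "FAIL <log>", copies the log text
   (without the trailing newline, truncated to fit) into log. */
static BuildStatus parse_build_response(const char *line, char *log, size_t log_cap) {
    if (log_cap > 0)
        log[0] = '\0';
    if (strcmp(line, "OK\n") == 0 || strcmp(line, "OK") == 0)
        return BUILD_OK;
    if (strncmp(line, "FAIL", 4) == 0 &&
        (line[4] == ' ' || line[4] == '\n' || line[4] == '\0')) {
        const char *msg = (line[4] == ' ') ? line + 5 : line + 4;
        size_t len = strcspn(msg, "\n");
        if (log_cap > 0) {
            if (len >= log_cap)
                len = log_cap - 1;
            memcpy(log, msg, len);
            log[len] = '\0';
        }
        return BUILD_FAILED;
    }
    return BUILD_BAD_RESPONSE;
}
```

On `BUILD_OK`, the client would move on to downloading the outputs over FTP; on `BUILD_FAILED`, it would fail the build task and print the compiler log.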

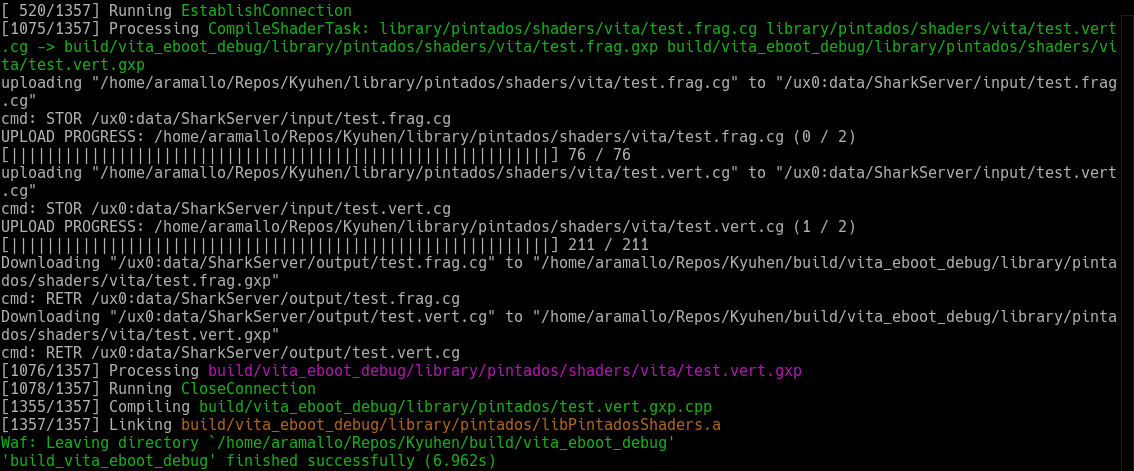

Thanks to the magic of Waf, I was able to integrate this cleanly into my build system. It all happens automatically and reliably. Basically, when I build with a flag like --shader-server:<IP:port>, the build system will create compilation tasks for the shader files in my source tree (and of course, it will only execute those tasks if the source files changed between builds; partial builds are a key feature of Waf!). Then it will automatically handle uploading the sources, talking to the server, and downloading the results.
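The "only run if the sources changed" behavior boils down to comparing a signature of each task's inputs against the one stored from the previous build. Waf's real signature scheme is more involved; this C sketch swaps in FNV-1a as the hash and signs the shader source together with its compile options, both of which are my own simplifications, and the function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define FNV64_OFFSET 14695981039346656037ULL
#define FNV64_PRIME  1099511628211ULL

/* Feeds len bytes into a running 64-bit FNV-1a hash. */
static uint64_t fnv1a64_update(uint64_t h, const void *data, size_t len) {
    const unsigned char *p = data;
    for (size_t i = 0; i < len; i++) {
        h ^= p[i];
        h *= FNV64_PRIME;
    }
    return h;
}

/* Hypothetical signature for one shader compile task: the source text plus the
   compiler options, so changing either one invalidates the previous result. */
static uint64_t shader_task_signature(const char *src, size_t src_len,
                                      const char *opts, size_t opts_len) {
    uint64_t h = fnv1a64_update(FNV64_OFFSET, src, src_len);
    h = fnv1a64_update(h, "\0", 1);  /* separator byte between the two inputs */
    return fnv1a64_update(h, opts, opts_len);
}

/* A task runs only if it has never run (no stored signature) or its inputs changed. */
static int task_needs_run(uint64_t current, const uint64_t *stored) {
    return stored == NULL || *stored != current;
}
```

Hashing contents rather than comparing timestamps means that touching a file without changing it doesn't trigger a round trip to the Vita, which matters when every compile goes over FTP.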

Once it downloads the compiled shaders, it will write them to the build folder for further processing (like embedding them in the app binary), but it will also copy them to a special folder in the source tree. That way, I can continue to build my project even after I've shut down the server. Imagine doing something like this with CMake!
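The fallback behavior described above can be summarized as a small decision function. This is a hypothetical C sketch of that logic, not Waf code, and the enum and function names are made up: with a server configured, shaders are recompiled only when needed; without one, the copies saved in the source tree are reused, with a flag raised if the sources have changed since those copies were made.

```c
#include <stdbool.h>

typedef enum {
    SHADERS_COMPILE_REMOTELY,  /* compile on the Vita, write to the build dir, refresh the source-tree copy */
    SHADERS_USE_CACHED_COPY,   /* reuse the binaries saved in the source tree */
    SHADERS_UNAVAILABLE        /* no server and no saved copy: the build has to fail */
} ShaderSource;

static ShaderSource choose_shader_source(bool server_configured, bool cached_copy_exists,
                                         bool sources_changed, bool *cache_is_stale) {
    *cache_is_stale = false;
    if (server_configured && (sources_changed || !cached_copy_exists))
        return SHADERS_COMPILE_REMOTELY;
    if (cached_copy_exists) {
        /* Without a server, edited sources can't be recompiled; flag it instead of failing. */
        *cache_is_stale = sources_changed;
        return SHADERS_USE_CACHED_COPY;
    }
    return SHADERS_UNAVAILABLE;
}
```

Whether a stale cache should only warn or hard-fail the build is a policy choice; warning keeps day-to-day builds working while the server Vita is off.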

The most practical way to use this is to have the server running on a Vita TV while you do application development on another Vita. That way you can have the server application running at all times and not worry about it going to sleep every couple of minutes.

It all works pretty well, so it's a shame I didn't end up using it. Performance isn't great due to needing FTP, but there are some ways to optimize that, like using USB file transfers, or even a vm/emulator.

The Game

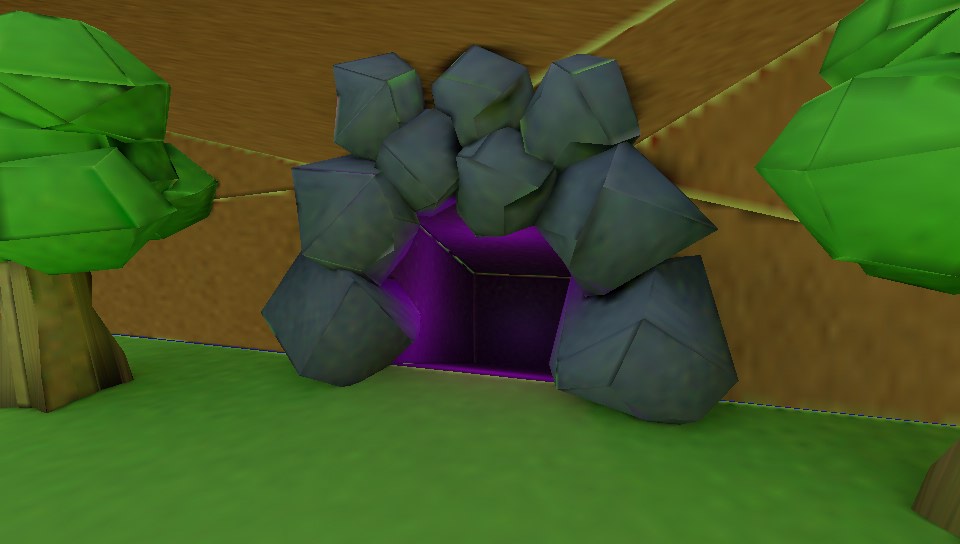

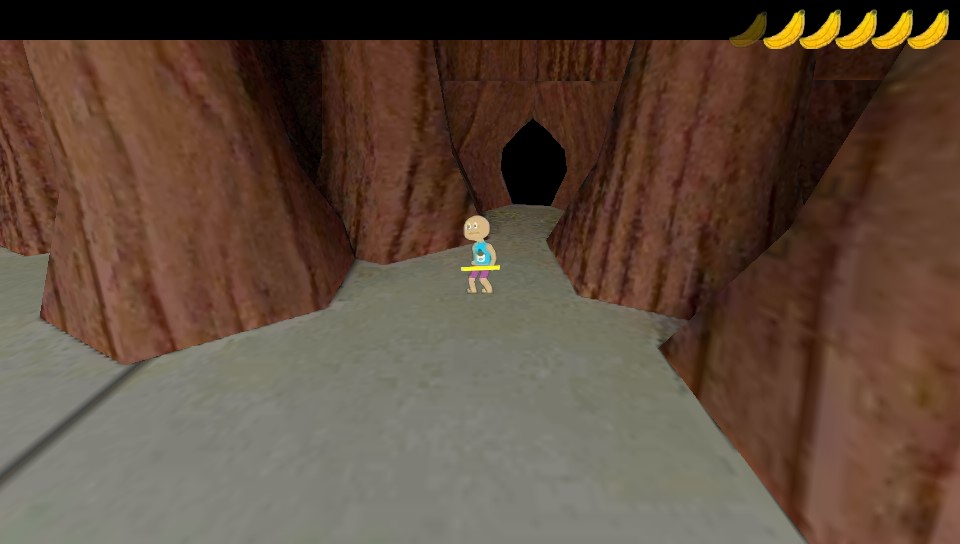

I didn't get around to fixing that texture wrapping issue

The game I wanted to make was a small action RPG with Paper Mario-style graphics, mainly because I did not have any kind of 3D skeletal animation implemented, but I did have 2D skeletal animation through Spine integration working thanks to the mobile game I was making.

Unfortunately, since I spent the majority of the time on this project working on engine features, I didn't have enough time to build the game I originally wanted. In fact, all of the actual game code was written in about a week towards the end. I had so little time left that the final VPK still uses random placeholder icons/LiveArea graphics and a working title.

Another problem with the game is that, due to the time constraints, the textures look terrible. This is because towards the end, I was using compressed textures for everything, including the characters. Those formats do not produce good results for that type of art style, but I didn't have time to switch them out. Also, the environments look really grainy and blurry because I did not have time to do a re-render of the scene in Blender, so I ended up having to ship the low-quality, low-res bake I was using during testing... there's also no audio :(

Render quality so low you can almost count the individual rays!

Luckily for me though, I did accomplish what I set out to do originally: add 3D rendering to my little engine, and I also got some nice basis/ktx textures with asynchronous loading, and an LOD-based streaming system. These are all things that will improve the "real" game I'm working on, and if it wasn't for this detour into homebrew, I probably would not have invested the time to work on them.

Overall it was a very fun project!

Future?

Will I complete this game in the future? Maybe. I will at the very least release an updated version that fixes some of the bugs, like the broken UI, transparency draw order, audio, invincible player/enemies, and maybe I'll improve the texture/visual quality. However, I am working on another project right now and don't plan on abandoning that to work on this.

But I have a lot of ideas (and many unused assets) that I came up with during the design phase for this that I think would be pretty interesting to explore. I think some of them are novel, and I personally have never seen them done before in this style of game. So I definitely want to try implementing them at some point, and what I have with this game is a decent starting point for that.

Follow me on Twitter to be notified when I release the updated version!

Downloads

- Updated less buggy version (coming soon)
- PC version (coming soon)

© Alejandro Ramallo 2025